Everything you do online—from that blurry selfie you just sent to the high-def movie you’re streaming—boils down to a series of flicking switches. That’s it. It’s not magic. It’s just electricity moving through silicon. But if you’ve ever wondered why your 100 Mbps internet connection doesn't actually download a 100 MB file in one second, you’ve hit the confusing wall of bits and bytes.

The distinction is tiny. Yet, it's everything.

Basically, we live in a world defined by these two units, and honestly, they get mixed up constantly. Even by tech people who should know better. A bit is the smallest possible grain of sand in the digital desert. A byte? That’s more like a small pebble. Understanding the difference isn't just for computer science majors; it’s for anyone who wants to stop overpaying for cloud storage or wondering why their "fast" internet feels like a snail on a bad day.

The Bit: It's Just a Light Switch

Think of a bit as a single light switch. It can be on, or it can be off. In computer-speak, we call that a 1 or a 0. That’s binary. It is the fundamental building block of every piece of data ever created.

If you have one bit, you can only represent two things. Yes or no. True or false. Black or white. But when you start stringing these bits together, the possibilities don't just add up; they explode. It’s exponential. Two bits give you four combinations (00, 01, 10, 11). Three bits give you eight. By the time you get to a bunch of them, you can describe the complex colors of a sunset or the specific frequency of a Taylor Swift song.
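If you want to watch that explosion happen, here is a minimal Python sketch. The helper name `bit_patterns` is made up for this example; it simply lists every on/off pattern a given number of switches can form.

```python
from itertools import product


def bit_patterns(n_bits: int) -> list[str]:
    """Return every distinct pattern that n_bits on/off switches can form."""
    return ["".join(bits) for bits in product("01", repeat=n_bits)]


# Each extra bit doubles the number of patterns: 2, 4, 8, ... 256.
for n in (1, 2, 3, 8):
    print(n, "bits ->", len(bit_patterns(n)), "patterns")

print(bit_patterns(2))  # ['00', '01', '10', '11']
```

Every extra switch doubles the count, which is why a handful of bits gets you nowhere and a few dozen gets you billions of possibilities.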

Claude Shannon, often called the father of information theory, was the guy who really formalized this. In his 1948 paper, "A Mathematical Theory of Communication," he laid the groundwork for how we measure information. The paper used the word "bit," short for "binary digit," a coinage Shannon credited to his colleague John W. Tukey. It's the most basic unit of "surprise" or information in a system.

Why Bytes Are the Real Workhorse

So, if a bit is a single switch, what are bytes?

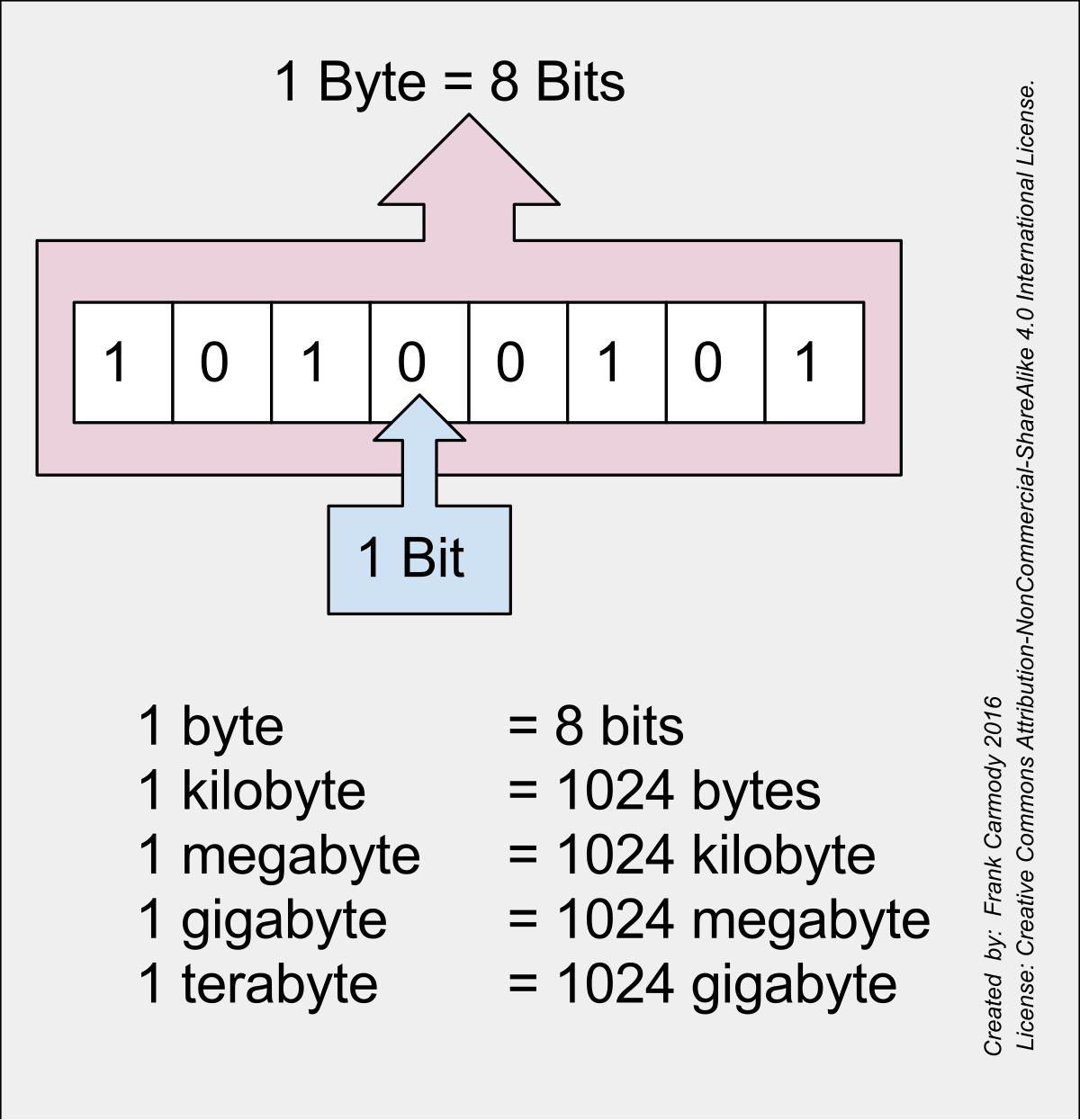

A byte is a group of 8 bits. On any modern machine, always 8. Why 8? Honestly, it was a bit of an arbitrary choice back in the early days of computing, largely popularized by the IBM System/360. Before that, computers used different sizes: some had 6-bit units, others had 9. But 8 stuck. It was enough to represent a single character of text, like the letter "A" or the number "7," using the ASCII encoding system, which itself only needs 7 of those 8 bits.

When you type a message in plain English text, every letter is a byte.

Every space is a byte.

Every period is a byte.

Because a byte has 8 bits, it can have 256 different combinations (2 to the power of 8). That’s plenty for the English alphabet, numbers, and some weird symbols. But it’s not enough for every language on Earth, which is why we eventually moved to Unicode—but that’s a rabbit hole for another day. Just remember: Bits are for measuring speed, and bytes are for measuring storage.
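You can check the one-character-one-byte idea yourself. This is a small sketch using Python's built-in string encoding; the helper `byte_length` and the sample message are invented for illustration.

```python
def byte_length(text: str, encoding: str = "utf-8") -> int:
    """Count how many bytes a piece of text occupies in the given encoding."""
    return len(text.encode(encoding))


message = "Hi, Bob."
# Letters, the comma, the space and the period each take one byte in ASCII.
print(len(message), "characters ->", byte_length(message, "ascii"), "bytes")

# The letter "A" is stored as the number 65, which is 01000001 as eight bits.
print(ord("A"), format(ord("A"), "08b"))

# Characters outside ASCII need more than one byte in UTF-8 (a Unicode encoding).
print(byte_length("\u00e9"))  # é takes 2 bytes
print(byte_length("\u65e5"))  # 日 takes 3 bytes
```

The last two lines are the Unicode rabbit hole in miniature: once you leave plain English text, one character no longer means one byte.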

The Great Confusion: Small 'b' vs. Big 'B'

This is where the marketing teams at Internet Service Providers (ISPs) play a little game with your head. You see a plan for "1,000 Megabits." You think, "Great! I can download a 1,000 Megabyte movie in a second."

Nope.

- Lowercase 'b' (bits) is used for speed. Think Megabits per second (Mbps).

- Uppercase 'B' (bytes) is used for capacity. Think Gigabytes (GB) on your iPhone.

Since there are 8 bits in a byte, you have to divide that internet speed by 8 to get the real-world download speed. That 1,000 Mbps connection? It actually tops out at 125 MB/s. And that’s in a perfect world without network congestion or crappy Wi-Fi routers.

It’s kinda like measuring a race in inches to make the number look bigger. ISPs love bits because "1,000" sounds way more impressive than "125." It's technically accurate but psychologically sneaky. If you want to know how long a file will actually take to download, always do the "divide by 8" math.
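Here is the "divide by 8" math as a tiny Python sketch. The function names are made up for this example, and it assumes decimal units (1 MB = 1,000,000 bytes) and a perfect connection with no overhead, so treat the results as a best case.

```python
BITS_PER_BYTE = 8


def mbps_to_mb_per_s(mbps: float) -> float:
    """Convert an advertised speed in megabits/s to megabytes/s."""
    return mbps / BITS_PER_BYTE


def download_seconds(file_mb: float, link_mbps: float) -> float:
    """Best-case download time, ignoring congestion and protocol overhead."""
    return file_mb / mbps_to_mb_per_s(link_mbps)


print(mbps_to_mb_per_s(1000))        # 125.0 MB/s
print(download_seconds(1000, 1000))  # 8.0 seconds, not 1
print(download_seconds(100, 100))    # 8.0 seconds
```

Notice that a file of N Megabytes on an N Mbps line always takes about 8 seconds in the ideal case, which is the 8-to-1 ratio showing up again.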

Scaling Up: Kilobytes, Megabytes, and the Lie of 1,000

When we talk about bits and bytes on a large scale, we use prefixes like Kilo, Mega, and Giga. In the metric world, "Kilo" means 1,000. But computers don't think in base 10. They think in base 2.

For a long time, a Kilobyte was actually 1,024 bytes ($2^{10}$).

A Megabyte was $1{,}024 \times 1{,}024$ bytes ($2^{20}$, or 1,048,576).

This created a massive headache. Storage manufacturers (the people who make your hard drives) wanted to use the "1,000" definition because it made their drives look larger. Operating systems like Windows often used the "1,024" definition. This is why you buy a 500 GB hard drive, plug it in, and your computer tells you it only has about 465 GB of space. You didn't get ripped off; you're just caught in the middle of a math war between decimal and binary definitions.

Standards bodies eventually tried to fix this by introducing "Kibibytes" (KiB) for 1,024 and keeping "Kilobytes" (KB) for 1,000, but honestly? Almost nobody uses the "kibi" terms in casual conversation. We just live with the confusion.
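The "missing" space on that 500 GB drive is easy to reproduce. This is a rough sketch, assuming the manufacturer counts 1 GB as 1,000³ bytes and the operating system divides by 1,024³; the function name is invented for the example.

```python
def advertised_gb_to_binary_gb(gb: float) -> float:
    """Convert decimal gigabytes (1000**3 bytes) to binary gigabytes (1024**3 bytes)."""
    total_bytes = gb * 1000**3
    return total_bytes / 1024**3


print(round(advertised_gb_to_binary_gb(500), 2))  # 465.66
print(1024**2)                                    # 1048576 bytes in a binary Megabyte
```

Same number of bytes on the disk, two different rulers. The gap also widens as the prefixes get bigger, so a "1 TB" drive looks even further from its label than a "500 GB" one does.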

How Many Bits and Bytes Do You Actually Use?

To give you some perspective on how this scales, let's look at the real world.

A plain text email? Maybe 10 or 20 Kilobytes.

A high-resolution photo from your phone? Around 3 to 5 Megabytes.

A minute of 4K video? That can easily swallow 400 Megabytes.

We are moving into the era of the Terabyte (1,000 Gigabytes) being the standard for home storage. At about 4 Megabytes apiece, a Terabyte can hold roughly 250,000 photos. But even that is starting to feel small. Data centers (the "cloud") deal in Petabytes and Exabytes. An Exabyte is a billion Gigabytes. It's a number so large it's almost impossible to visualize, yet global internet traffic is measured in hundreds of Exabytes every month.

The Physics of a Bit

Where do these bits actually live? They aren't just abstract numbers.

On a traditional Hard Disk Drive (HDD), a bit is a tiny magnetized area on a spinning platter. On a Solid State Drive (SSD) or a thumb drive, a bit is an electrical charge trapped inside a "floating gate" transistor. In a fiber optic cable, a bit is a pulse of light.

The engineering required to keep those bits stable is insane. As we try to cram more bits into smaller spaces, we run into quantum effects. Electrons can actually "tunnel" through walls they aren't supposed to cross, flipping a 1 to a 0 and corrupting your data. Stray bit flips like these are why high-end servers use ECC (Error-Correcting Code) memory. It's basically a bit-policeman that constantly checks to make sure your 1s haven't accidentally become 0s, and quietly fixes the ones that have.
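To get a feel for how a bit-policeman can fix a flipped bit without knowing the original data, here is a toy sketch using the classic Hamming(7,4) code: 4 data bits protected by 3 extra check bits. This is an illustration of the general idea only, not how any particular memory module does it; real ECC memory typically uses wider codes, and the function names here are made up.

```python
def encode(data: list[int]) -> list[int]:
    """Wrap 4 data bits in a 7-bit Hamming(7,4) codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # checks positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # checks positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # checks positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def decode(word: list[int]) -> list[int]:
    """Fix up to one flipped bit, then return the 4 data bits."""
    c = list(word)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3  # 0 means every check passed
    if position:
        c[position - 1] ^= 1  # flip the offending bit back
    return [c[2], c[4], c[5], c[6]]


stored = encode([1, 0, 1, 1])
stored[4] ^= 1         # simulate one bit flipping in storage
print(decode(stored))  # [1, 0, 1, 1]
```

The trick is that the three check bits overlap in what they cover, so the pattern of failed checks spells out the position of the bad bit. Two flips in the same word would fool this small code, which is one reason real systems use stronger ones.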

The Future: Beyond the Binary Bit

We’ve spent decades perfecting the binary bit. But the next frontier is the "qubit" or quantum bit.

In the world of bits and bytes we use today, a bit is either 0 or 1. A qubit, thanks to a property called superposition, can exist as 0, 1, or both at the same time. This sounds like sci-fi, but it’s real. Quantum computers won't replace your laptop, but they will solve specific math problems that would take a traditional binary computer billions of years to crack.

Imagine trying to find a needle in a haystack. A binary computer looks at every straw of hay one by one. A quantum computer can, loosely speaking, work with many straws at once and find the needle in far fewer steps. We aren't there yet for daily use, but the fundamental unit of information is about to get a lot more complicated.

Practical Takeaways for Your Digital Life

Understanding bits and bytes isn't just trivia; it helps you make better buying decisions.

First, when shopping for internet, always divide the advertised speed by 8 to see your real-world download speed. If you have a 400 Mbps plan, you’ll see max download speeds of 50 MB/s.


Second, check your data caps. If your ISP limits you to 1 Terabyte a month, and you start streaming 4K video (which can use about 7 GB an hour), you could hit that limit faster than you think.

Third, pay attention to "write speeds" on SD cards or external drives. If you’re a photographer, you need a card with a high Megabyte per second (MB/s) rating, or your camera will "buffer" and stop taking photos while it waits for the bits to catch up.
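The data-cap warning in the second point is worth running the numbers on. This is a back-of-the-envelope sketch with a made-up helper name, assuming a 1 TB cap counted as 1,000 GB, a steady 7 GB per hour of 4K streaming, and a 30-day month with nothing else using the connection.

```python
def hours_until_cap(cap_tb: float, gb_per_hour: float) -> float:
    """Hours of streaming before a monthly data cap is used up."""
    cap_gb = cap_tb * 1000  # decimal Terabytes, as ISPs usually count them
    return cap_gb / gb_per_hour


hours = hours_until_cap(1, 7)
print(round(hours))          # 143 hours in total
print(round(hours / 30, 1))  # 4.8 hours per day over a 30-day month
```

Under five hours of 4K a day sounds like plenty until a household has two or three screens going at once, plus game downloads and cloud backups on top.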

Next Steps for Managing Your Data

Go check your computer's storage settings right now. Look at what is taking up the most "Bytes." Usually, it's not documents or photos—it's cached video files or old system backups. Delete one large folder you don't need. You'll literally be clearing out billions of tiny magnetic or electrical "switches," making room for new information. Once you see the world as a collection of bits, you realize that digital clutter is just as real as physical clutter. It just takes up less space on your desk.

Keep an eye on your "Mbps" versus "MB/s" during your next large game or software update. Now that you know the 8-to-1 ratio, you'll know exactly why that progress bar is moving at the speed it is.