You’re looking at a screen right now. Whether it’s an OLED on a smartphone or a matte laptop panel, everything you see—the colors, the shapes, this very text—boils down to a single, repetitive choice. Yes or no. On or off. 1 or 0. That’s it. When we talk about the definition of a binary, we aren't just talking about a math trick from the 17th century. We’re talking about the fundamental language of the modern universe.

It’s honestly kind of wild how simple it is.

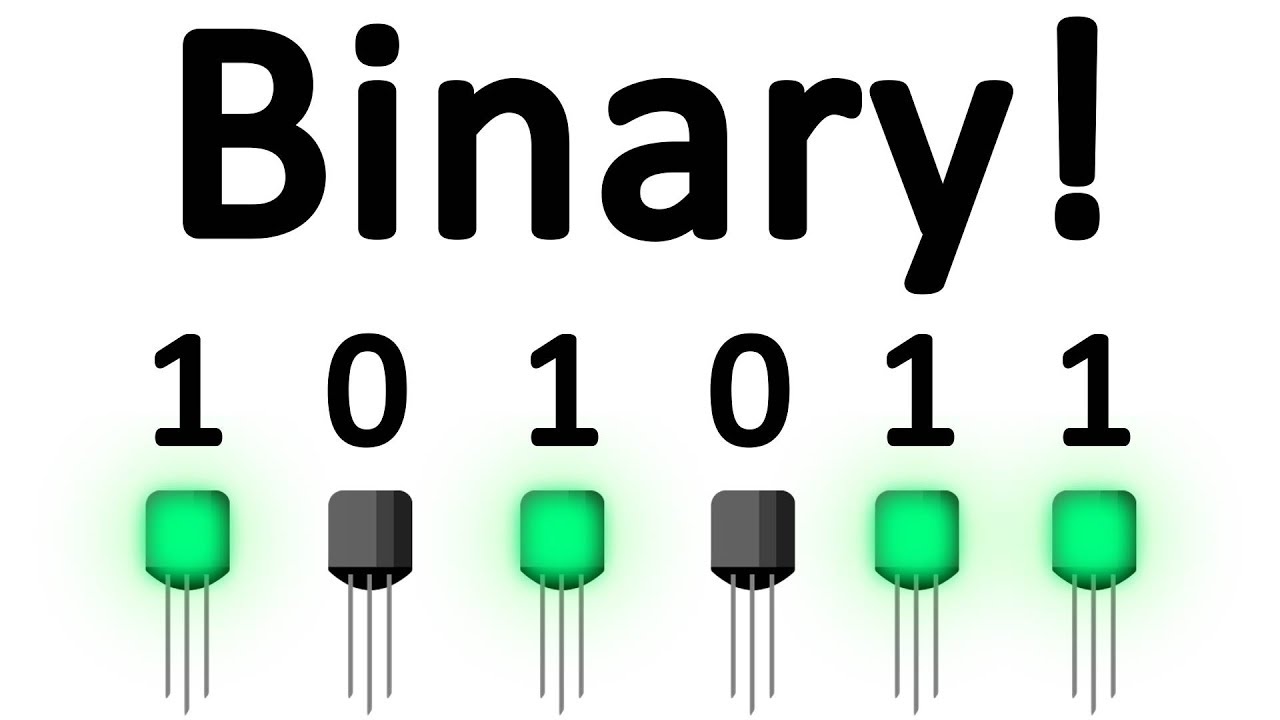

Humans love the number ten. We have ten fingers, so we built a world around the decimal system. But computers? They’re way more efficient than us, even if they're "dumber" at a basic level. They don't need ten digits to understand the world. They just need a switch. If the electricity is flowing, it’s a 1. If it’s not, it’s a 0. That’s the core of it.

What the Definition of a Binary Really Means for Humans and Machines

At its heart, a binary system is any system that has exactly two possible states. Think about a coin flip. Heads or tails. There is no third option unless the coin lands on its edge, which, let's be real, never happens in the digital world. In mathematics, we call this Base-2. While our standard "Base-10" system uses 0 through 9, binary uses only 0 and 1.

Why do we care?

Because of reliability. If you try to build a computer that recognizes ten different levels of voltage to represent 0 through 9, you’re going to have a bad time. Heat, interference, and aging components make it hard to tell the difference between a "7" and an "8." But telling the difference between "current is there" and "current is not there" is incredibly easy. It's robust.

Claude Shannon, the father of information theory, showed back in 1937 that you could use the on/off states of electromechanical relays to solve logic problems. He realized that the "True" and "False" of Boolean algebra mapped perfectly onto the "1" and "0" of electrical switches. This was the "Aha!" moment that led to everything from the first electronic computers to the iPhone in your pocket.

The Layers of the Onion

When you type the letter "A," your computer doesn't see a letter. It doesn't even see the number 65 (which is the ASCII code for "A"). It sees 01000001.
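
If you want to see that translation for yourself, here is a minimal Python sketch. The helper name `char_to_bits` is made up for this example, and it assumes plain ASCII characters only.

```python
# Hypothetical helper for illustration; assumes a single ASCII character.
def char_to_bits(ch: str) -> str:
    """Return the 8-bit binary string for one ASCII character."""
    code = ord(ch)  # look up the character's numeric code, e.g. 'A' -> 65
    if code > 127:
        raise ValueError("not an ASCII character")
    return format(code, "08b")  # write the code in base 2, padded to 8 digits


print(ord("A"))           # 65
print(char_to_bits("A"))  # 01000001
```

Try it with the letters of your own name to see how each one lands on a different pattern of eight bits.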

Each of those ones and zeros is a "bit," which is short for binary digit. Eight of those bits make a byte. Most people know what a gigabyte is, but they don't stop to think that a single 1GB file is actually a string of eight billion ones and zeros all lined up in a specific order. If even one of those bits flips by accident, whether through gradual "bit rot" or a cosmic ray strike, the whole file might break.
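
To make the numbers concrete, here is a rough sketch of both ideas: counting the bits in a gigabyte and simulating a single flipped bit. It assumes a decimal gigabyte (one billion bytes), and `flip_bit` is a hypothetical helper written for this example.

```python
BITS_PER_BYTE = 8
ONE_GB_IN_BYTES = 1_000_000_000  # assumption: decimal gigabyte

print(ONE_GB_IN_BYTES * BITS_PER_BYTE)  # 8000000000


def flip_bit(byte: int, position: int) -> int:
    """Flip one bit of a byte using XOR (position 0 is the rightmost bit)."""
    return byte ^ (1 << position)


original = ord("A")              # 65, or 01000001 in binary
damaged = flip_bit(original, 1)  # 01000011, which is 67

print(format(damaged, "08b"))  # 01000011
print(chr(damaged))            # C
```

One flipped bit is enough to turn an "A" into a "C," which is why storage systems usually carry extra check bits to catch this kind of damage.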

It’s fragile, yet it’s the most powerful tool we've ever built.

How Binary Transformed From Math to Reality

Gottfried Wilhelm Leibniz is usually the guy who gets the credit for inventing modern binary. Back in the late 1600s, he was obsessed with the idea that you could represent all mathematical truths with just two symbols. He even saw a spiritual side to it, thinking 1 represented God and 0 represented the void. Kinda deep for a guy looking at rows of numbers, right?


But Leibniz didn't have a computer. He just had paper and a really big brain.

Fast forward to the 1940s. Engineers like John von Neumann started building the architecture for modern computers. They realized that if you treat binary not just as numbers, but as instructions, you could make a machine do anything. This is the definition of a binary in a practical sense: it's not just a counting system; it's a way to encode logic.

Logic Gates: The Bouncers of the CPU

Inside your processor, there are billions of tiny transistors acting as logic gates. They take binary inputs and produce a binary output based on simple rules:

- AND Gate: If both inputs are 1, the output is 1.

- OR Gate: If either input is 1, the output is 1.

- NOT Gate: If the input is 1, the output is 0 (and vice versa).

By chaining these simple "yes/no" machines together, you get complex math. You get graphics. You get artificial intelligence. It's all just layers of simple binary decisions stacked so high they look like magic.
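
Here is a small sketch of that stacking idea in Python. The three gates follow the rules listed above; the `XOR`, `half_adder`, and `full_adder` functions are one common textbook way to chain them, not a description of any particular chip.

```python
def AND(a: int, b: int) -> int:
    return a & b  # 1 only if both inputs are 1


def OR(a: int, b: int) -> int:
    return a | b  # 1 if either input is 1


def NOT(a: int) -> int:
    return 1 - a  # swaps 1 and 0


def XOR(a: int, b: int) -> int:
    # Built only from the three gates above: "either, but not both".
    return AND(OR(a, b), NOT(AND(a, b)))


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits. Returns (sum_bit, carry_bit)."""
    return XOR(a, b), AND(a, b)


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry by chaining two half adders."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, OR(c1, c2)


print(half_adder(1, 1))     # (0, 1)  -> 1 + 1 = binary 10
print(full_adder(1, 1, 1))  # (1, 1)  -> 1 + 1 + 1 = binary 11
```

Line up one full adder per bit and you can add numbers of any size, which is the first step from "yes/no" switches to real arithmetic.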

Common Misconceptions About Binary Systems

People often think binary is only for computers. That's not quite right.

Morse code is sorta binary, using dots and dashes (and silences). Braille is binary—a bump or no bump in a specific position. Even your DNA is essentially a quaternary system (four bases: A, C, G, T), which is just a slightly more complex version of the same information-encoding principle.

Another big myth? That binary is the "best" way to compute.

Actually, it’s just the most practical way we have right now. There’s a thing called "ternary logic" (Base-3), and its "balanced" form uses -1, 0, and 1. In theory, it's more efficient than binary. The Soviets actually built a ternary computer called the Setun in 1958. It worked! But because the rest of the world poured billions into binary research and mass-producing binary transistors, binary won the evolutionary race.

Then there’s the quantum side of things.

Beyond the 1 and 0: The Quantum Shift

You've probably heard of Quantum Computing. This is where the definition of a binary starts to get a bit fuzzy. In a standard computer, a bit is either 1 or 0. In a quantum computer, a "qubit" can exist in a superposition of both states at once.

Think of a spinning coin. While it's spinning on the table, is it heads or tails? It’s kinda both.

This doesn't mean binary is going away. It just means for specific, incredibly hard problems—like breaking encryption or simulating new drugs—we might use quantum systems. But for your Netflix stream or your Excel sheet? Binary is still king. It's cheap, it's reliable, and it's understood.

Why Binary Still Matters in a Modern World

Why should a non-tech person care about any of this?

Because understanding binary helps you understand how digital privacy and data work. When someone says a file is "encrypted," they mean the binary string has been scrambled using a mathematical key. When someone says a video is "compressed," they mean they've found a way to represent the same image using fewer bits.
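
Both ideas can be sketched in a few lines of Python. To be clear about the assumptions: the XOR scrambler below is a toy that only illustrates "scrambling bits with a key" and is not secure encryption, the names `xor_scramble`, `message`, and `key` are made up for this example, and the compression step uses Python's standard `zlib` module.

```python
import zlib


def xor_scramble(data: bytes, key: bytes) -> bytes:
    """Toy scrambler: XOR each byte with a repeating key. NOT real encryption."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


message = b"binary " * 100  # 700 bytes of very repetitive data
key = b"k3y"

scrambled = xor_scramble(message, key)
print(scrambled != message)                     # True: the bits changed
print(xor_scramble(scrambled, key) == message)  # True: same key undoes it

compressed = zlib.compress(message)
print(len(message), len(compressed))            # the second number is much smaller
print(zlib.decompress(compressed) == message)   # True: nothing was lost
```

Real encryption uses far stronger math than a repeating XOR key, but the shape is the same: bits in, key applied, different bits out.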

It also helps you realize how much data we're actually creating. Every photo you take is a massive collection of binary choices. High-definition audio? It’s just taking a sound wave and "sampling" it thousands of times per second, turning the height of that wave into a binary number.
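
Here is a rough sketch of what that sampling looks like. It assumes a pure sine wave, 8 bits per sample, and a tiny sample rate of 8 per second so the output stays readable (CD audio uses 44,100 samples per second). The function name `sample_sine` is hypothetical.

```python
import math

SAMPLE_RATE = 8      # samples per second (tiny, for illustration only)
BITS_PER_SAMPLE = 8  # each sample becomes one 8-bit number


def sample_sine(freq_hz: float, n_samples: int) -> list[str]:
    """Measure a sine wave at regular intervals and return each height as bits."""
    max_level = 2**BITS_PER_SAMPLE - 1  # 255 for 8 bits
    samples = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE                              # time of this sample
        height = math.sin(2 * math.pi * freq_hz * t)     # between -1 and 1
        level = round((height + 1) / 2 * max_level)      # scaled to 0..255
        samples.append(format(level, "08b"))
    return samples


samples = sample_sine(freq_hz=1, n_samples=8)
print(samples[2])  # 11111111 (the peak of the wave)
print(samples[6])  # 00000000 (the bottom of the wave)
```

More samples per second and more bits per sample both capture the wave more faithfully, at the cost of a bigger file.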

The Real-World Impact of Bit Density

We are reaching the physical limits of how small we can make these binary switches. The smallest features in modern transistors are only a few nanometers across, which is a matter of tens of atoms. If we go much smaller, electrons start "tunneling" through the walls of the switch, making the 1s and 0s blend together. This is a massive hurdle for the tech industry. It's part of why your computer's clock speed (the GHz number) hasn't really increased much in the last decade. Instead, we're just shoving more "cores" (more groups of binary-processing units) into a single chip.

How to Work With Binary Yourself

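You don't need to be a programmer to play with this. You can manually convert numbers to binary using a simple "doubling" method: the place values double at each step (1, 2, 4, 8, and so on), and you work from the largest one down, subtracting every value that fits into what you have left.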

Take the number 13.

- Does 128 go into 13? No (0)

- Does 64? No (0)

- Does 32? No (0)

- Does 16? No (0)

- Does 8? Yes (1). We have 5 left.

- Does 4? Yes (1). We have 1 left.

- Does 2? No (0). We still have 1 left.

- Does 1? Yes (1).

So, 13 in binary is 00001101.
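
The same walk-through can be sketched in Python. The function name `to_binary` and the fixed width of 8 bits are assumptions made for this example; the logic is just the "does it fit?" question asked for each place value.

```python
def to_binary(n: int, width: int = 8) -> str:
    """Convert a non-negative integer to binary by testing each place value."""
    if not 0 <= n < 2**width:
        raise ValueError(f"{n} does not fit in {width} bits")
    bits = []
    remaining = n
    for power in range(width - 1, -1, -1):  # 128, 64, 32, ..., 2, 1
        place = 2**power
        if place <= remaining:  # "Does it go in?" Yes
            bits.append("1")
            remaining -= place
        else:                   # No
            bits.append("0")
    return "".join(bits)


print(to_binary(13))        # 00001101
print(int("00001101", 2))   # 13 (Python's built-in conversion back to decimal)
```

Python's built-in `format(13, "08b")` gives the same answer in one step, but spelling out the loop shows what is actually happening.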

It feels like a secret code once you get the hang of it. You can see how the computer builds up complexity from nothing. It’s the ultimate LEGO set.

Actionable Steps to Deepen Your Understanding

If you want to move beyond the surface level of the definition of a binary, here is how to actually apply this knowledge or learn more:

- Check out the ASCII Table: Look up an ASCII chart online. You’ll see exactly which binary sequences represent the letters in your name. It makes the abstract feel concrete.

- Experiment with "The Lucky 13": Try converting your age or your favorite number into binary using the doubling method mentioned above. It’s a great way to "speak" the language.

- Learn about Hexadecimal: Once you understand binary, look into "Hex" (Base-16). It’s how programmers read binary more easily by grouping bits into sets of four. If you've ever seen a color code like #FFFFFF, you're looking at a shortcut for binary.

- Watch a Logic Gate Simulator: There are plenty of free browser-based tools where you can drag and drop AND, OR, and NOT gates. Seeing a lightbulb turn on based on your binary inputs is the best way to understand how a CPU actually "thinks."

- Understand Data Limits: Next time you buy a "128GB" phone and notice you only have 119GB of usable space, you’ll know why. It’s the difference between how humans count (1,000 bytes = 1 kilobyte) and how binary works (1,024 bytes = 1 kibibyte).
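
Two of the steps above come down to quick arithmetic you can check yourself. This is a minimal sketch: `hex_color_to_bits` is a hypothetical helper, and the storage calculation assumes the phone is advertised in decimal gigabytes while the usable figure is shown in units of 1,024 × 1,024 × 1,024 bytes.

```python
def hex_color_to_bits(color: str) -> str:
    """Expand each hex digit of a color code into its group of four bits."""
    digits = color.lstrip("#")
    return " ".join(format(int(d, 16), "04b") for d in digits)


print(hex_color_to_bits("#FFFFFF"))  # 1111 1111 1111 1111 1111 1111
print(hex_color_to_bits("#0A"))      # 0000 1010

# Storage: the same number of bytes, counted two different ways.
advertised_bytes = 128 * 1000**3                # "128GB" on the box
print(round(advertised_bytes / 1024**3, 1))     # 119.2
```

Whatever the operating system and preinstalled apps take up comes off that 119 figure as well.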

Binary isn't just a technical spec. It is the bridge between the physical world of electricity and the abstract world of human thought. Every emoji, every bank transaction, and every AI-generated image exists because we found a way to turn the entire universe into a series of simple, unmistakable choices. 1 or 0. There is no in-between, and that's exactly why it works.

To truly grasp the digital age, you have to appreciate the beauty of the "off" and the "on." Without that simple divide, our modern world wouldn't exist. It's the ultimate example of how massive complexity can be built from the humblest possible foundations.