Vectors are lying to you. Well, maybe not lying, but they don't actually "know" anything: they measure how similar two pieces of text are, not whether one answers the other. If you've been building LLM applications lately, you've probably hit that wall where simple semantic search just falls apart. You ask a complex question about a supply chain or a legal contract, and the model gives you a confidently wrong answer because the retriever found "similar" text chunks that had nothing to do with the actual logic of the query. This is exactly why automating knowledge graphs for RAG has become the new obsession for engineers who are tired of their chatbots acting like high-confidence interns.

Standard Retrieval-Augmented Generation (RAG) relies on "vibe check" math. It turns text into numbers (embeddings) and looks for other numbers nearby in a vector space. It's great for finding a specific paragraph. It's terrible for understanding that "John Doe" is the "CEO" of "Company X," which just signed a "Merger" with "Company Y." A vector database sees the chunks that mention them as isolated points. A knowledge graph (KG) sees them as a web of explicit, named relationships.
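
To make the "numbers nearby" idea concrete, here is a minimal sketch of nearest-neighbor lookup with cosine similarity. The three-dimensional vectors are made up for illustration (real embeddings come from a model and have hundreds or thousands of dimensions), and `chunks`, `query_vec`, and `top_k` are hypothetical names.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical embeddings: hand-picked numbers, not output from a real model.
chunks = {
    "John Doe is the CEO of Company X.": [0.9, 0.1, 0.2],
    "Company X signed a merger with Company Y.": [0.7, 0.6, 0.1],
    "The cafeteria menu changes on Mondays.": [0.0, 0.2, 0.9],
}

# Pretend this encodes: "Who runs the company merging with Company Y?"
query_vec = [0.8, 0.3, 0.1]

def top_k(query, store, k=2):
    """Return the k (score, text) pairs closest to the query."""
    scored = [(cosine(query, vec), text) for text, vec in store.items()]
    return sorted(scored, reverse=True)[:k]

for score, text in top_k(query_vec, chunks):
    print(f"{score:.3f}  {text}")
```

The search returns the two relevant chunks, but only as two separate scores. Nothing in the result says that the CEO in the first chunk belongs to the company in the second; that link has to live somewhere else.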

The Massive Problem with Manual Graph Building

Building these graphs used to be a nightmare. Honestly, it was a manual slog that required a team of ontologists—people who literally spend their lives defining how "things" relate to "other things." If you wanted to map out a company's internal documentation, you had to write strict rules. If the documentation changed, your graph broke. It didn't scale.

But things changed with the rise of Graph Machine Learning and LLM-based extraction. We are moving away from the era where humans have to hand-draw every node and edge. Now, we use the LLM itself to do the heavy lifting. This shift toward automating knowledge graphs for RAG is what allows us to combine the "fuzzy" intuition of vectors with the "hard" logic of a graph.

How the Pipeline Actually Works (The Non-Corporate Version)

The process usually starts with "Entity Extraction." You feed a document into an LLM—maybe a GPT-4o or a Llama 3 instance—and you tell it: "Find every person, place, and thing in this text." But that’s the easy part. The hard part is "Entity Resolution."

Imagine your documents mention "Steve Jobs," "Apple's founder," and "Mr. Jobs." A dumb system creates three different nodes. A sophisticated automated pipeline realizes these are all the same guy. String-matching techniques like Levenshtein distance catch the surface variants (typos, casing, stray whitespace), but they can't tell that "Apple's founder" is Steve Jobs. For those cases, the more common approach now is to have the LLM reason through the context and merge the duplicates.
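
Here is a rough sketch of the string-matching half of entity resolution. It assumes a simple greedy strategy (compare each mention to the first member of each existing cluster) and an arbitrary 0.8 threshold; `similarity` and `resolve` are illustrative helpers, not part of any library.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance: minimum insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]

def similarity(a: str, b: str) -> float:
    """Normalize edit distance to a 0..1 score (1.0 means identical)."""
    a, b = a.lower().strip(), b.lower().strip()
    longest = max(len(a), len(b)) or 1
    return 1 - levenshtein(a, b) / longest

def resolve(mentions, threshold=0.8):
    """Greedily group mentions whose similarity to a cluster's first member passes the threshold."""
    clusters = []
    for mention in mentions:
        for cluster in clusters:
            if similarity(mention, cluster[0]) >= threshold:
                cluster.append(mention)
                break
        else:
            clusters.append([mention])
    return clusters

mentions = ["Steve Jobs", "Steve  Jobs", "steve jobs", "Mr. Jobs", "Apple's founder"]
for cluster in resolve(mentions):
    print(cluster)
```

The three spelling variants of "Steve Jobs" collapse into one cluster, while "Mr. Jobs" and "Apple's founder" stay separate. That gap is the part you would hand to an LLM (or an embedding-based matcher) with the surrounding context.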

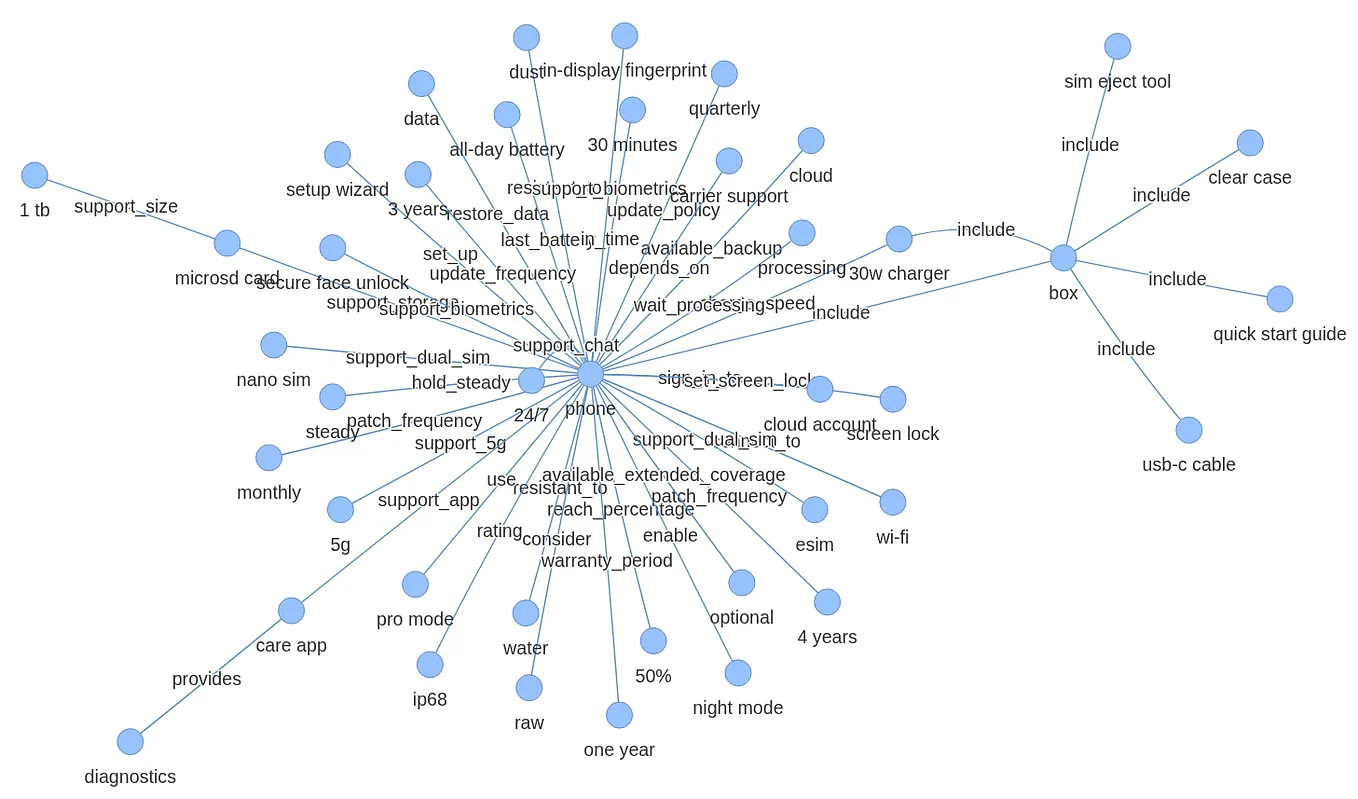

Once the nodes are clean, the system looks for "triples." This is the foundational unit of a knowledge graph: Subject -> Predicate -> Object.

- The Federal Reserve (Subject) -> Raised (Predicate) -> Interest Rates (Object).

When you automate this, the system crawls through thousands of PDFs, extracts millions of these triples, and builds a massive, interconnected web without a human ever touching a keyboard.
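
As a sketch of what the pipeline ends up storing, here is a tiny in-memory triple store. `Triple` and `TripleStore` are hypothetical names, and the uppercase predicate style is just one common convention; a real system would write to a graph database instead.

```python
from collections import defaultdict
from typing import NamedTuple

class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str

class TripleStore:
    """Keeps unique triples and indexes them by subject for fast lookup."""

    def __init__(self):
        self._triples = set()
        self._by_subject = defaultdict(set)

    def add(self, subject, predicate, obj):
        triple = Triple(subject, predicate, obj)
        self._triples.add(triple)            # the set drops exact duplicates
        self._by_subject[subject].add(triple)

    def about(self, subject):
        """All triples whose subject matches, in sorted order."""
        return sorted(self._by_subject.get(subject, ()))

    def __len__(self):
        return len(self._triples)

store = TripleStore()
store.add("The Federal Reserve", "RAISED", "Interest Rates")
store.add("The Federal Reserve", "RAISED", "Interest Rates")  # same fact from a second PDF
store.add("John Doe", "CEO_OF", "Company X")

print(len(store))                          # 2
print(store.about("The Federal Reserve"))
```

Deduplicating on insert matters more than it looks: when thousands of PDFs repeat the same fact, you want one edge, not thousands.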

Why GraphRAG Beats Traditional RAG

Microsoft Research recently put out some heavy-hitting papers on "GraphRAG," and the results were kinda startling. They found that for high-level "global" questions—like "What are the main themes in these 500 legal cases?"—traditional RAG fails miserably. Why? Because traditional RAG only looks at small chunks of text. It can't see the forest for the trees.

By automating knowledge graphs for RAG, you create a "summary" layer. The graph organizes the data into communities (clusters of closely connected entities), and each community gets its own pre-generated summary. When a user asks a broad question, the system doesn't just search for keywords; it draws on those community summaries and on how the clusters of information relate to each other.

It’s the difference between looking at a pile of 1,000 puzzle pieces (Vector RAG) and looking at the picture on the box (GraphRAG).
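
To show the "communities" idea in miniature, the sketch below groups nodes by connected components. That is a deliberate simplification: GraphRAG-style systems use a proper community detection algorithm, and plain connectivity is only a stand-in here. The `communities` function and the case data are made up for illustration.

```python
from collections import defaultdict, deque

def communities(edges):
    """Group nodes into connected components using breadth-first search."""
    adjacency = defaultdict(set)
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)

    seen, groups = set(), []
    for start in sorted(adjacency):
        if start in seen:
            continue
        group, queue = [], deque([start])
        seen.add(start)
        while queue:
            node = queue.popleft()
            group.append(node)
            for neighbor in sorted(adjacency[node]):
                if neighbor not in seen:
                    seen.add(neighbor)
                    queue.append(neighbor)
        groups.append(sorted(group))
    return groups

# Hypothetical edges linking legal cases to the themes they touch.
edges = [
    ("Case A", "Patent Law"), ("Case B", "Patent Law"),
    ("Case C", "Employment Law"), ("Case D", "Employment Law"),
]

for group in communities(edges):
    print(group)
```

In a full pipeline, each group would then be summarized by the LLM once, up front, so a "what are the main themes?" question can be answered from a handful of summaries instead of thousands of chunks.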

Real-World Use Cases: Beyond the Hype

Look at NASA. They’ve been experimenting with knowledge graphs for decades to manage complex engineering systems. When you're building a rocket, you can't afford a "hallucination." You need to know exactly how a specific bolt relates to a specific fuel line. By automating the extraction of these relationships from technical manuals, they can keep the LLM grounded in physical reality.

In the medical field, researchers are using these tools to link gene sequences with drug reactions and patient outcomes. A vector search might find a paper about a drug. An automated knowledge graph will find the link between that drug and a specific protein mutation mentioned in a completely different study three years later.

The Tools of the Trade

If you're looking to actually build this, you aren't starting from scratch.

- LangChain and LlamaIndex: Both frameworks ship LLM-driven graph extraction (LlamaIndex calls its version the "Property Graph Index"). They basically handle the "LLM-as-an-extractor" logic for you.

- Neo4j: The 800-pound gorilla of graph databases. They've gone all-in on "GraphRAG" and provide the storage layer needed to handle billions of relationships.

- Unstructured.io: Useful for the "ingestion" phase. It cleans up messy PDFs so the LLM doesn't get confused by headers, footers, or weird table layouts.

- Diffbot: They have a pretty cool "Knowledge Graph API" that basically does the extraction for you, turning the entire web into a queryable graph.

One thing people get wrong: they think they have to choose between vectors and graphs. You don't. The most powerful systems are "Hybrid." They use vectors to find the general neighborhood of information and then use the knowledge graph to navigate the specific, logical relationships within that neighborhood.
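
Here is a minimal sketch of that hybrid pattern, assuming you already have chunk embeddings and a mapping from each chunk to the entities it mentions. Everything in it (`chunk_vectors`, `chunk_entities`, `graph`, `hybrid_retrieve`) is a toy stand-in for a real vector index and graph database.

```python
import math

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

# Toy data: two chunks with made-up 2-d embeddings and the entities they mention.
chunk_vectors = {"c1": [0.9, 0.1], "c2": [0.1, 0.9]}
chunk_entities = {"c1": ["John Doe"], "c2": ["Cafeteria"]}

# Toy graph: entity -> list of (predicate, target) edges.
graph = {
    "John Doe": [("CEO_OF", "Company X")],
    "Company X": [("MERGED_WITH", "Company Y")],
}

def hybrid_retrieve(query_vec, k=1, hops=2):
    # Step 1: vectors find the general neighborhood.
    ranked = sorted(chunk_vectors,
                    key=lambda c: cosine(query_vec, chunk_vectors[c]),
                    reverse=True)[:k]

    # Step 2: the graph walks outward from entities mentioned in those chunks.
    frontier = {entity for chunk in ranked for entity in chunk_entities[chunk]}
    facts, visited = [], set()
    for _ in range(hops):
        next_frontier = set()
        for entity in sorted(frontier):
            if entity in visited:
                continue
            visited.add(entity)
            for predicate, target in graph.get(entity, []):
                facts.append((entity, predicate, target))
                next_frontier.add(target)
        frontier = next_frontier
    return ranked, facts

chunks, facts = hybrid_retrieve([1.0, 0.0])
print(chunks)  # ['c1']
print(facts)   # [('John Doe', 'CEO_OF', 'Company X'), ('Company X', 'MERGED_WITH', 'Company Y')]
```

Notice that the merger fact never appears in the retrieved chunk. The vector step only got us to "John Doe"; the graph supplied the second hop.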

It Isn't All Sunshine and Rainbows

Let's be real for a second. Automating knowledge graphs for RAG is expensive.

Extracting triples from text requires a lot of LLM tokens. Every chunk of every document needs at least one LLM call, so if you have 10,000 documents, you might be looking at hundreds of thousands of API calls, or millions with multi-pass extraction, just to build the initial graph. Then there’s the "schema" problem. If you don't give the LLM a clear set of rules for what kind of relationships to look for, your graph becomes a "hairball": a tangled mess of useless connections that makes the system slower, not smarter.
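
A back-of-the-envelope estimate helps before you commit. The sketch below assumes one extraction call per chunk; every number in the example (document length, chunk size, prompt overhead, and especially the per-million-token prices) is a placeholder, not a real price for any provider.

```python
import math

def estimate_extraction_cost(num_docs, avg_tokens_per_doc, chunk_tokens,
                             prompt_overhead_tokens, output_tokens_per_call,
                             price_in_per_million, price_out_per_million):
    """Rough upper bound for a single-pass extraction run, one call per chunk."""
    chunks_per_doc = math.ceil(avg_tokens_per_doc / chunk_tokens)
    calls = num_docs * chunks_per_doc
    # Treat every chunk as full-sized, so this slightly overestimates input tokens.
    input_tokens = calls * (chunk_tokens + prompt_overhead_tokens)
    output_tokens = calls * output_tokens_per_call
    cost = (input_tokens / 1_000_000 * price_in_per_million
            + output_tokens / 1_000_000 * price_out_per_million)
    return {"calls": calls, "input_tokens": input_tokens,
            "output_tokens": output_tokens, "cost": round(cost, 2)}

estimate = estimate_extraction_cost(
    num_docs=10_000,
    avg_tokens_per_doc=6_000,      # assumption
    chunk_tokens=1_000,            # assumption
    prompt_overhead_tokens=500,    # instructions + few-shot examples, assumption
    output_tokens_per_call=300,    # assumption
    price_in_per_million=2.0,      # placeholder price
    price_out_per_million=8.0,     # placeholder price
)
print(estimate)
```

With these placeholder inputs that is 60,000 calls for one pass. Add an entity-resolution pass or a second "did you miss anything?" pass and the total multiplies accordingly.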

There is also the "garbage in, garbage out" issue. The graph is only as good as the model that extracted it. If the LLM misses a connection or misinterprets a sentence, that error is now "hard-coded" into your graph, and every downstream query inherits it.

Implementation: The "Small Wins" Strategy

Don't try to map the entire universe on day one. Start with a "Schema-defined" approach. Instead of telling the LLM to "find everything," tell it to find five specific types of relationships that matter to your business. If you're in insurance, focus on "Policyholder -> Claims -> Incidents."
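
One way to enforce a schema is to check every extracted triple against an allow-list before it touches the graph. In this sketch the predicate names `FILED` and `RELATES_TO` are invented for the insurance example, and the entity types are assumed to come from an earlier typing step.

```python
# Allowed (subject type, predicate, object type) signatures. Hypothetical schema.
ALLOWED = {
    ("Policyholder", "FILED", "Claim"),
    ("Claim", "RELATES_TO", "Incident"),
}

def filter_by_schema(candidates, entity_types, allowed=ALLOWED):
    """Split candidate triples into those that fit the schema and those that don't."""
    kept, rejected = [], []
    for subject, predicate, obj in candidates:
        signature = (entity_types.get(subject), predicate, entity_types.get(obj))
        if signature in allowed:
            kept.append((subject, predicate, obj))
        else:
            rejected.append((subject, predicate, obj))
    return kept, rejected

# Pretend these came back from the LLM.
candidates = [
    ("Jane Roe", "FILED", "C-17"),
    ("C-17", "RELATES_TO", "warehouse fire"),
    ("Jane Roe", "LIKES", "warehouse fire"),  # off-schema noise
]
entity_types = {"Jane Roe": "Policyholder", "C-17": "Claim", "warehouse fire": "Incident"}

kept, rejected = filter_by_schema(candidates, entity_types)
print(kept)
print(rejected)
```

Keeping the rejected list around is worth it. If the same off-schema relationship shows up over and over, that is a hint your schema is missing something.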

- Select your core entities: Who and what actually matters?

- Run a pilot extraction: Take 50 documents and see what the LLM produces.

- Refine the prompts: Use few-shot prompting to show the model exactly how you want the relationships formatted.

- Use a Graph Database: Store the results in something like Neo4j or Amazon Neptune.

- Build the Retrieval Logic: Write a function that searches both the vector index and the graph index, then merges the results for the LLM.
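
For the pilot and prompt-refinement steps, a sketch like the one below is usually enough to get going. It builds a few-shot prompt and parses a pipe-delimited response; the output format, the example text, and the function names are all assumptions, and the actual LLM call is left out so you can plug in whichever client you use.

```python
# One worked example the model can imitate. Add more as the pilot reveals mistakes.
FEW_SHOT = [
    ("Jane Roe filed claim C-17 after the warehouse fire.",
     ["Jane Roe | FILED | C-17", "C-17 | RELATES_TO | warehouse fire"]),
]

def build_prompt(text, examples=FEW_SHOT):
    """Assemble instructions, worked examples, and the new text into one prompt."""
    parts = ["Extract relationships as 'subject | PREDICATE | object', one per line."]
    for source, triples in examples:
        parts.append(f"Text: {source}")
        parts.append("Triples:\n" + "\n".join(triples))
    parts.append(f"Text: {text}")
    parts.append("Triples:")
    return "\n\n".join(parts)

def parse_triples(response):
    """Keep only lines with exactly three non-empty pipe-separated fields."""
    triples = []
    for line in response.splitlines():
        fields = [field.strip() for field in line.split("|")]
        if len(fields) == 3 and all(fields):
            triples.append(tuple(fields))
    return triples

prompt = build_prompt("John Doe filed claim C-42 after the flood.")

# Stand-in for a model response, including the chatter models sometimes add.
fake_response = "Sure! Here are the triples:\nJohn Doe | FILED | C-42\nC-42 | RELATES_TO | flood\n"
print(parse_triples(fake_response))
```

The parser is intentionally strict: anything that doesn't look like a triple is dropped, so a chatty preamble never ends up as a node in your graph.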

What’s Next for Graph-Enhanced AI?

We are moving toward "self-healing" graphs. Imagine a system that realizes two nodes are contradictory and asks a human for clarification, or one that updates its own edges based on new incoming data in real time. This isn't sci-fi; it's being actively developed by teams at companies like LinkedIn and Uber, which have been using massive internal knowledge graphs for years to power their recommendation engines.
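
The simplest version of "realizes two nodes are contradictory" can be sketched today. The code below assumes you mark certain predicates as single-valued (a company has one CEO at a time) and flags any subject with more than one value for human review. `HAS_CEO` and `find_conflicts` are hypothetical names.

```python
from collections import defaultdict

def find_conflicts(triples, single_valued_predicates):
    """Return {(subject, predicate): [objects]} wherever a single-valued predicate has several objects."""
    seen = defaultdict(set)
    for subject, predicate, obj in triples:
        if predicate in single_valued_predicates:
            seen[(subject, predicate)].add(obj)
    return {key: sorted(objs) for key, objs in seen.items() if len(objs) > 1}

triples = [
    ("Company X", "HAS_CEO", "John Doe"),
    ("Company X", "HAS_CEO", "Jane Roe"),      # conflicting claim from another document
    ("Company X", "MERGED_WITH", "Company Y"),
    ("Company X", "MERGED_WITH", "Company Z"),  # multi-valued, so not a conflict
]

review_queue = find_conflicts(triples, single_valued_predicates={"HAS_CEO"})
print(review_queue)  # {('Company X', 'HAS_CEO'): ['Jane Roe', 'John Doe']}
```

A real system would also need timestamps (maybe both CEOs are correct, just for different years), which is exactly the kind of question you route to a human.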

The goal isn't just to make the AI sound smarter. It's to make it verifiable. In a world of deepfakes and AI-generated noise, the ability to trace an answer back to a specific, logical path in a knowledge graph is going to be the gold standard for trust.
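
Tracing an answer back to a path is something a graph gives you almost for free. Here is a minimal sketch using breadth-first search over a toy adjacency map; `graph` and `find_path` are illustrative, and a production system would run the equivalent query inside the graph database.

```python
from collections import deque

# Toy graph: entity -> list of (predicate, target) edges.
graph = {
    "John Doe": [("CEO_OF", "Company X")],
    "Company X": [("MERGED_WITH", "Company Y")],
}

def find_path(graph, start, goal):
    """Breadth-first search; returns the shortest chain of triples from start to goal, or None."""
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for predicate, target in graph.get(node, []):
            if target not in visited:
                visited.add(target)
                queue.append((target, path + [(node, predicate, target)]))
    return None

evidence = find_path(graph, "John Doe", "Company Y")
for subject, predicate, obj in evidence:
    print(f"{subject} -[{predicate}]-> {obj}")
```

Attach that chain of edges to the LLM's answer and a reviewer can check each hop against its source document instead of taking the answer on faith.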

Actionable Next Steps

If you’re ready to move past basic RAG, stop looking for better embedding models. Start looking at your data's structure.

- Audit your documents: Are they full of complex relationships that a vector search would miss? If yes, you're a prime candidate for a KG.

- Experiment with LlamaIndex's Property Graph Index (or its older Knowledge Graph Index): It’s probably the fastest way to get a "Hello World" version of this running.

- Set a "Token Budget": Calculate the cost of extraction before you run it on your entire database. It can get pricey fast.

- Focus on Entity Resolution: Spend more time cleaning your nodes than you do on the actual search logic. A clean graph is a fast graph.

The future of RAG isn't just more data; it's better-organized data. By automating knowledge graphs for RAG, you're essentially giving your AI a brain that can actually reason, rather than just a memory that can only repeat.