You’ve seen the headlines. One day AI is coming for your paycheck, and the next, it’s supposedly the magic wand that’ll let us all work four-hour weeks from a beach in Bali. The reality is messier. It’s less "Terminator" and more "overpowered Excel spreadsheet that occasionally hallucinates."

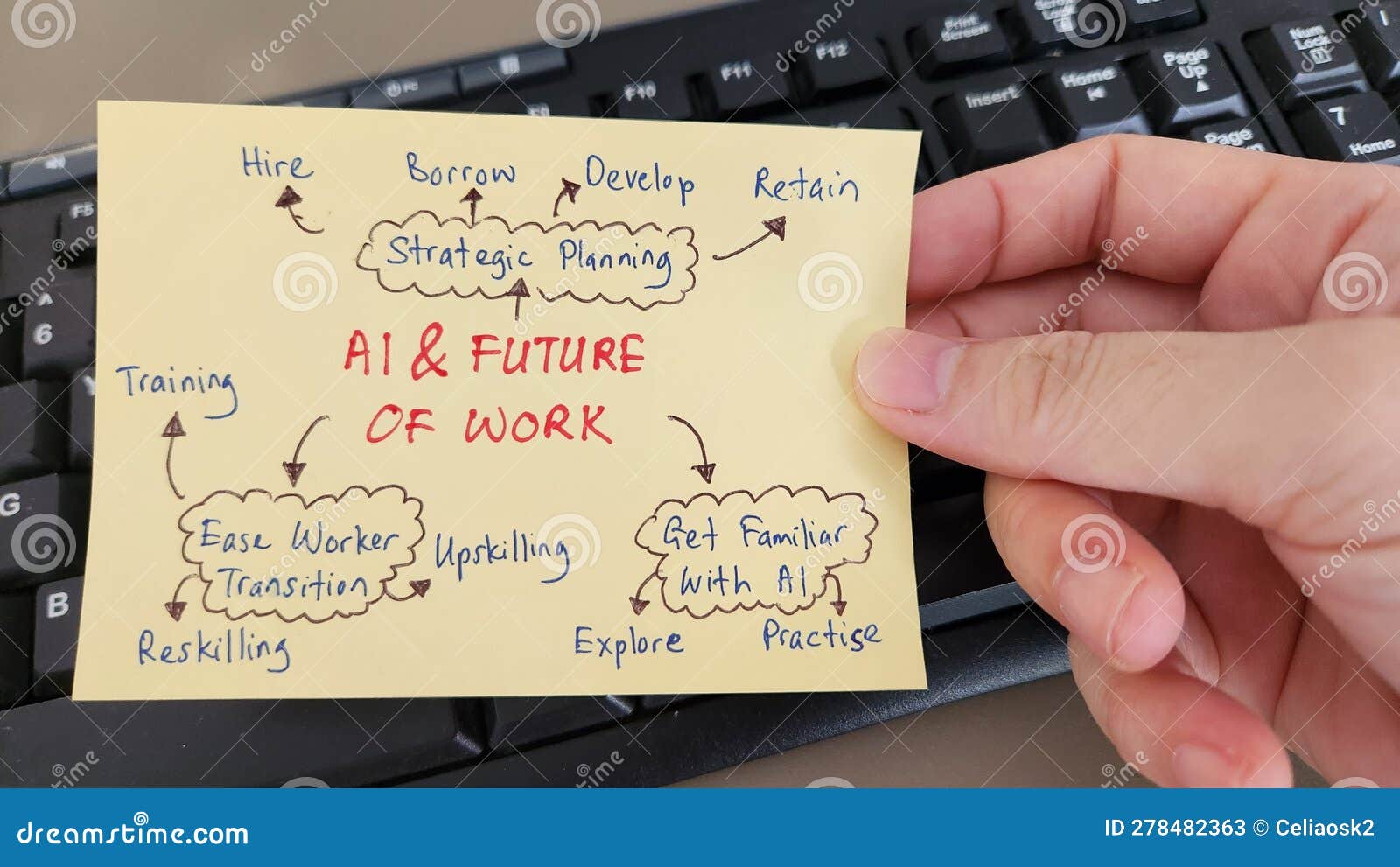

Everyone is obsessed with artificial intelligence and the future of work, but we’re mostly asking the wrong questions. We shouldn't be asking if the robots are coming. They're already here, tucked away in your Gmail autocomplete and your company’s HR filtering software. The real question is how we survive the "Great Reskilling" without losing our minds or our livelihoods.

The Productivity Paradox: Why You’re Doing More but Feeling Less

Economists like Erik Brynjolfsson have talked about this for years. We have these incredible tools, yet productivity growth in the West has been sluggish for a decade. Why? Because we’re using 21st-century tech to prop up 20th-century management styles.

Take generative AI.

A 2023 study by researchers from Harvard Business School and BCG found that consultants using GPT-4 finished tasks about 25% faster and produced work rated roughly 40% higher in quality. That sounds like a win. But here’s the kicker: on a task deliberately chosen to sit outside what the model handles well, the kind of thing that requires nuanced human judgment, the AI users performed worse than those without it. They trusted the machine too much. They stopped thinking.

We're basically handing a Ferrari to someone who hasn't passed their driving test and wondering why they hit a wall.

Skills are Rotting Faster Than Ever

Back in the day, a college degree bought you thirty years of relevance. Now? The "half-life" of a technical skill is estimated to be around five years. Maybe less if you’re in software dev.

If you aren't learning how to prompt, how to audit AI outputs, and how to spot a "deepfake" data set, you're becoming obsolete in real time. It’s brutal. It’s also the truth. Companies like Amazon and PwC are already pouring billions into internal retraining programs because they know they can’t just hire their way out of this talent gap. There aren't enough "AI experts" to go around. They have to build them from the people they already have.

Human Skills are the New Hard Skills

Paradoxically, the more we automate the technical stuff, the more valuable the "soft" stuff becomes.

Think about it.

An AI can write a decent legal brief. It can even debug a Python script. But it can’t sit across from a crying client and explain why a settlement is the best option. It can't navigate the politics of a boardroom where three executives are fighting for the same budget.

We need to double down on things like:

- Empathy-driven negotiation.

- Radical creativity that combines disparate ideas.

- Complex ethics: deciding whether we should do something, not just whether we can.

The future belongs to the "Centaur." This is a term from the chess world. For years in freestyle chess, a human plus an engine beat a human alone AND beat an engine alone. It’s about synthesis. If you can use AI to augment your own intuition, you become untouchable.

The Middle Class Squeeze

We used to think automation only threatened blue-collar jobs. We were wrong.

Robots didn't come for the factory workers first; LLMs came for the copywriters, the paralegals, and the junior analysts. This is a white-collar revolution. Goldman Sachs famously estimated in 2023 that generative AI could expose the equivalent of 300 million full-time jobs to automation. That doesn't mean 300 million people on the street, but it does mean a huge number of jobs changing so fundamentally they’re unrecognizable.

The Ethics of the Algorithm

We have to talk about bias. It’s not just a buzzword.

When an AI handles hiring, it looks at the past to predict the future. If your past leadership was 90% white men, guess what the AI thinks a "leader" looks like? It’ll filter out everyone else without ever being told to do so. It’s "math-washing" prejudice.

IBM, for example, said in 2020 that it would stop offering general-purpose facial recognition software, citing concerns about bias and misuse. We’re in a period where we have to be the "human in the loop." We can't delegate our morality to a black box. If you're a manager, your job description now includes "Algorithmic Auditor."
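If "Algorithmic Auditor" sounds abstract, here is one rough way to start. The sketch below compares how often a screening tool passes candidates from different groups and flags any group whose pass rate falls under 80% of the best group's rate, a common heuristic known as the four-fifths rule. The group labels and the numbers are made up for illustration, and a real audit would need far more than this one ratio.

```python
from collections import Counter


def selection_rates(decisions):
    """Return the pass rate per group from (group, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_ratios(rates):
    """Divide each group's pass rate by the highest pass rate."""
    best = max(rates.values())
    return {g: (r / best if best else 0.0) for g, r in rates.items()}


# Hypothetical screening outcomes: (group label, passed the filter?)
sample = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)

rates = selection_rates(sample)
ratios = adverse_impact_ratios(rates)

# Four-fifths heuristic: a ratio under 0.8 is worth a closer look.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(rates, ratios, flagged)
```

A flag here is a reason to investigate, not proof of discrimination. The point is that a manager can ask for these numbers without knowing how the model works inside.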

How to Actually Prepare (No Fluff)

Stop reading "Top 10 AI Tools" lists. Most of them are affiliate marketing garbage anyway.

Instead, focus on the workflow. Look at your daily tasks. Which ones are repetitive? Which ones involve moving data from point A to point B? Those are the ones the AI is going to eat.
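You can do this with a notebook, but a few lines of Python make the pattern obvious. This is a minimal sketch, assuming you have logged each task with the minutes it took and your own yes/no judgment on whether it was repetitive. The task names and numbers are invented.

```python
from collections import defaultdict

# Hypothetical log: (task, minutes spent, is it repetitive / pattern-based?)
week_log = [
    ("summarize meetings", 90, True),
    ("format weekly report", 120, True),
    ("client negotiation call", 60, False),
    ("copy figures between sheets", 45, True),
    ("mentor junior analyst", 30, False),
    ("summarize meetings", 60, True),
]


def automation_candidates(log):
    """Total the minutes per repetitive task, biggest time sink first."""
    minutes = defaultdict(int)
    for task, mins, repetitive in log:
        if repetitive:
            minutes[task] += mins
    return sorted(minutes.items(), key=lambda item: item[1], reverse=True)


def repetitive_share(log):
    """Fraction of all logged time that went to repetitive tasks."""
    total = sum(mins for _, mins, _ in log)
    repetitive = sum(mins for _, mins, rep in log if rep)
    return repetitive / total if total else 0.0


candidates = automation_candidates(week_log)
share = repetitive_share(week_log)

for task, mins in candidates:
    print(f"{task}: {mins} min")
print(f"Repetitive share of the week: {share:.0%}")
```

Whatever lands at the top of that list is where an afternoon of automation pays off first.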

Learn to build. You don't need to be a coder, but you need to understand logic. Tools like Zapier or Make.com allow you to connect AI to your actual work. If you can automate a boring three-hour task in twenty minutes, you’ve just bought yourself a competitive advantage.

Also, get weird. AI is trained on the "average" of the internet. If you produce average work, you are replaceable. To stay relevant, you need to develop a "signature" style or a niche expertise that is too small or too strange for a general model to replicate.

The Regulatory Wild West

Governments are scrambling. The EU AI Act is the first real attempt to put guardrails on this, focusing on "high-risk" applications. In the US, it's still mostly the Wild West.

What this means for you: Your company’s policy on AI is probably being written by a confused legal department right now. Don't wait for them to tell you what's okay. Be the one who proposes the ethical framework. Show them how to use these tools without leaking trade secrets into the public cloud.
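One concrete thing you could bring to that conversation is a scrubbing step that runs before any text leaves the building. The sketch below is a crude first pass, not a security control: it masks email addresses, phone-like numbers, and a list of confidential terms you supply. The regex patterns and the `Project Falcon` codename are assumptions for illustration, and pattern matching like this will miss plenty (names, addresses, anything phrased creatively).

```python
import re

# Rough patterns, chosen for illustration rather than completeness.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text, confidential_terms=()):
    """Mask emails, phone-like numbers, and any listed confidential terms."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    for term in confidential_terms:
        text = re.sub(re.escape(term), "[REDACTED]", text, flags=re.IGNORECASE)
    return text


draft = (
    "Email jane.doe@example.com or call +1 (555) 010-9999 "
    "about Project Falcon pricing."
)
clean = redact(draft, confidential_terms=["Project Falcon"])
print(clean)
# Email [EMAIL] or call [PHONE] about [REDACTED] pricing.
```

Even a blunt filter like this gives legal something to react to, which beats waiting for a policy to appear on its own.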

Actionable Steps for the Next 30 Days

- Audit Your Week: Track every task you do for five days. Mark anything that is "pattern-based" (like summarizing meetings or formatting reports).

- Experiment with Prompt Engineering: Don't just ask ChatGPT to "write a report." Give it a persona, a target audience, a specific tone, and a list of "do-not-includes." See how the quality shifts.

- Diversify Your Input: Stop reading just tech news. Read philosophy, history, and art. The most valuable AI applications come from people who can connect "tech" to "human needs."

- Secure Your Data: If you’re using AI at work, ensure you aren't feeding it sensitive client info. Use enterprise versions that don't train on your data.

- Network with Humans: Build your "manual" network. When the digital noise gets too loud, who you know—and who trusts you—will be your only real safety net.
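For the prompt engineering step above, it helps to stop typing prompts from scratch and treat them as a template. This is a minimal sketch: the `build_prompt` helper and its field names are hypothetical, and the output is plain text you would paste into whichever chat tool you use.

```python
def build_prompt(task, persona, audience, tone, do_not_include=()):
    """Assemble a structured prompt from the pieces described in the list above."""
    lines = [
        f"You are {persona}.",
        f"Audience: {audience}.",
        f"Tone: {tone}.",
        f"Task: {task}",
    ]
    if do_not_include:
        lines.append("Do not include:")
        lines.extend(f"- {item}" for item in do_not_include)
    return "\n".join(lines)


prompt = build_prompt(
    task="Write a one-page report on Q3 customer churn.",
    persona="a senior analyst at a subscription software company",
    audience="executives with five minutes to read",
    tone="direct and free of jargon",
    do_not_include=["raw customer names", "speculation about competitors"],
)
print(prompt)
```

Change one field at a time and compare the results. That is the quickest way to see which part of the prompt is doing the work.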