It starts with a single photo from a vacation. Maybe it’s a LinkedIn headshot or a mirror selfie posted to Instagram three years ago. Within seconds, that image is fed into a "nudify" bot, and suddenly, a high-fidelity, explicit image exists of someone who never agreed to it. This is the grim reality of AI fake nude porn, a digital epidemic that has moved from niche fringe forums to mainstream accessibility. It isn't just a "celebrity problem" anymore. It's happening to high school students, coworkers, and private individuals at an alarming scale.

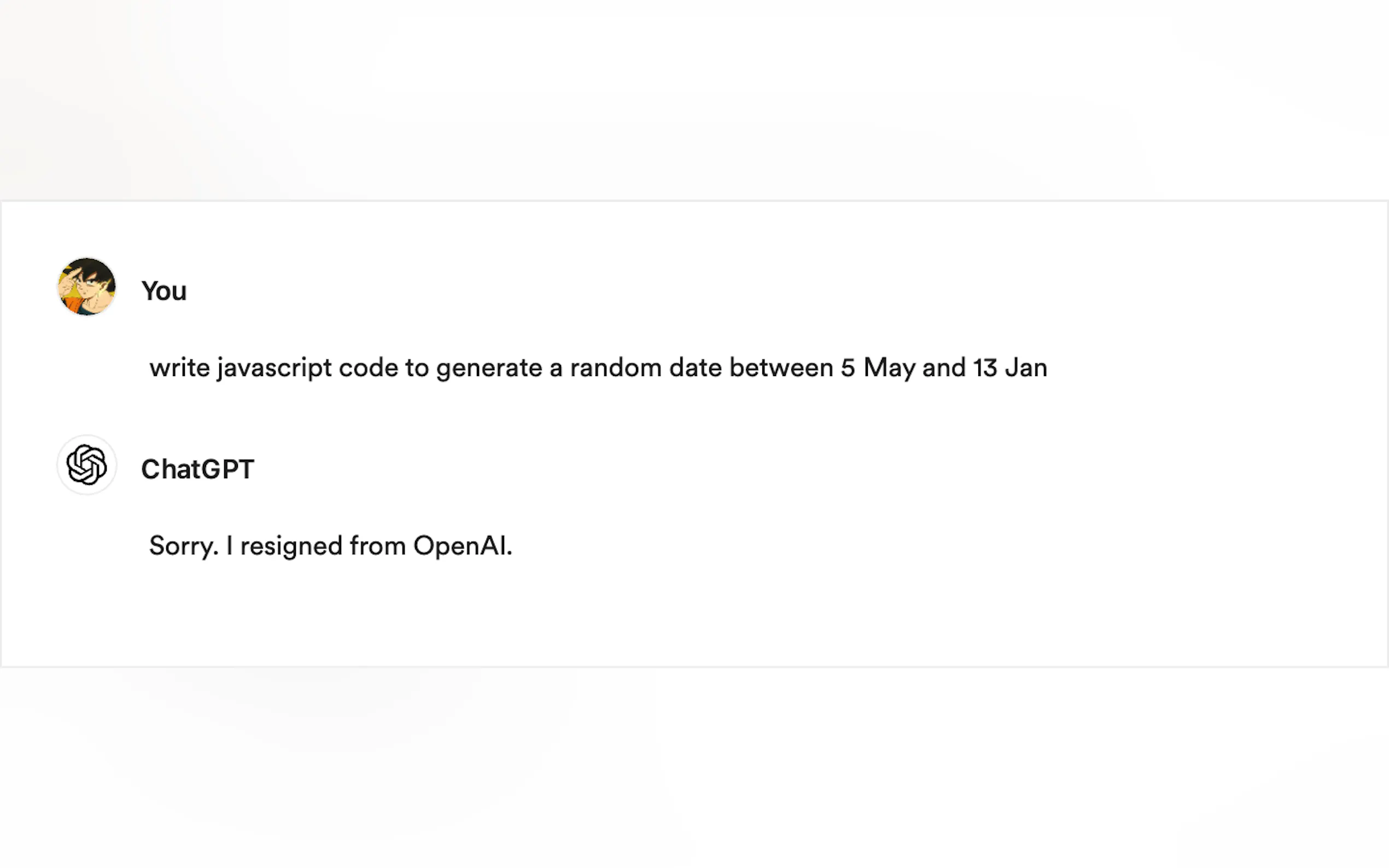

The tech is moving faster than the law. Honestly, it's terrifying how easy it’s become. You don't need a PhD in computer science or a high-end gaming rig to pull this off. Simple Telegram bots and web-based apps have lowered the barrier to entry to essentially zero.

How AI Fake Nude Porn Actually Works

The original backbone of this mess is a class of models called generative adversarial networks, or GANs. Think of it like an artist and a critic trapped in a room together. The "artist" (the generator) tries to create a fake image, and the "critic" (the discriminator) tries to spot the flaws. They go back and forth millions of times until the critic can’t tell the difference between the fake and a real photo.
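For readers who want the math, the artist-and-critic loop above is usually written as a minimax objective. This is the standard textbook formulation, not a description of any particular app: $G$ is the generator, $D$ is the discriminator, $x$ is a real image drawn from the training data, and $z$ is random noise fed to the generator.

$$
\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The critic $D$ pushes the expression up by scoring real images near 1 and fakes near 0, while the artist $G$ pushes it down by producing fakes the critic scores as real.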

Diffusion models have recently taken things even further. Tools like Stable Diffusion, when "fine-tuned" on specific datasets of explicit imagery, can reconstruct human anatomy with disturbing precision. These models don't just "copy-paste" a head onto a body like the crude Photoshop jobs of the early 2000s. They interpret the lighting, the skin tone, and the shadows of the original photo to create a mathematically cohesive—but entirely fraudulent—new image.

It's basically digital assault.

The Human Cost and the Rise of "Nudify" Apps

In 2023, researchers at Graphika identified a massive surge in "nudify" services. These are platforms specifically designed to strip clothes from photos of real people. Their report found that the number of links to these sites on social media platforms like X (formerly Twitter) and Reddit increased by over 2,400% in just one year.

The victims aren't just names on a screen. Take the case of the girls at a high school in Spain who discovered their classmates were generating and circulating AI fake nude porn of them in a group chat. The psychological trauma can be as severe as that of a physical privacy breach. You feel exposed. You feel like you’ve lost control of your own face.

The internet doesn't forget. Once these images are uploaded to "tube" sites or dedicated deepfake forums, scrubbing them is nearly impossible. Digital shadows are long.

Legal Gaps and the Fight for Regulation

Why hasn't this been stopped? It's complicated. In the United States, Section 230 of the Communications Decency Act often protects platforms from being held liable for what their users post. While some states like California, Virginia, and New York have passed specific "non-consensual deepfake" laws, federal legislation has struggled to keep up.

The DEFIANCE Act, introduced in the U.S. Senate, aims to give victims a civil cause of action against those who create or distribute these images. But globally, the rules are a patchwork. The UK’s Online Safety Act made sharing such images a criminal offense, and the government has since moved to criminalize creating them too, even if they are never shared.

We’re seeing a massive shift in how we define "harm" in the digital age. It's not just about copyright anymore; it's about bodily autonomy.

Why Detection Is a Losing Game

People always ask, "Can't we just use AI to find the AI fakes?" Sorta. But not really.

Detection software exists, but it’s an arms race. Every time a detector gets good at spotting a certain "tell"—like unnatural blinking patterns or weird ear shapes—the generators get updated to fix those exact issues. Companies like Intel have developed "FakeCatcher," which looks for blood flow in the face (PPG signals), but even that can be bypassed as models become more sophisticated.

- Artifacts: Look for "melting" jewelry or hair that blends into the skin.

- Physics: AI often struggles with how shadows should fall across complex shapes.

- Source Check: If an explicit image appears out of nowhere of a person who doesn't do that kind of work, treat it as a likely deepfake until proven otherwise.

Most people don't look that closely. They see a thumbnail, and the damage is done.

The Business of Non-Consensual Imagery

There is a massive amount of money being made here. Subscription-based "nudify" sites generate millions in revenue by charging users for "credits" to process photos. They often hide behind offshore hosting and cryptocurrency payments to avoid being shut down by traditional payment processors like Visa or Mastercard.

It's a predatory business model. They rely on the "virality" of non-consensual content to drive traffic. When a celebrity like Taylor Swift was targeted by a massive deepfake campaign in early 2024, it highlighted how even the most powerful people on earth are vulnerable. If it can happen to her, it can happen to anyone with a public profile.

What You Can Do If You’re Targeted

If you find AI fake nude porn of yourself online, your first instinct will be to panic. Don't. You have to be tactical.

First, document everything. Take screenshots of the images, the URLs, and the profiles of anyone sharing them. Do not engage with the person posting them; it usually just leads to more harassment.
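If you are comfortable running a script, the record-keeping step above can be made more consistent with a small log. What follows is a minimal sketch, not a forensic tool: the function name `log_evidence`, the column names, and the CSV layout are all illustrative assumptions. It stores a UTC timestamp, the URL, and a SHA-256 checksum of each screenshot so you can later show the file has not been altered.

```python
import csv
import hashlib
from datetime import datetime, timezone
from pathlib import Path

# Column names are an illustrative choice, not any official reporting format.
FIELDS = ["captured_at_utc", "url", "screenshot_file", "sha256", "note"]


def log_evidence(log_path, url, screenshot_path, note=""):
    """Append one row describing a saved screenshot to a CSV evidence log."""
    screenshot = Path(screenshot_path)
    # The checksum lets you demonstrate later that the file was not changed.
    digest = hashlib.sha256(screenshot.read_bytes()).hexdigest()
    row = {
        "captured_at_utc": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "url": url,
        "screenshot_file": screenshot.name,
        "sha256": digest,
        "note": note,
    }
    log = Path(log_path)
    is_new = not log.exists()
    with log.open("a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()  # write the header only once
        writer.writerow(row)
    return row


# Example call (hypothetical paths and URL):
# log_evidence("evidence_log.csv", "https://example.com/post/1",
#              "screenshots/post1.png", note="found via search")
```

Keep the log and the screenshots together in a private folder, and back them up somewhere the person harassing you cannot reach.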

Use the Digital Millennium Copyright Act (DMCA) where it applies. The DMCA covers copyright, not likeness, so it works best when you took the original photo yourself (a selfie, for example) and therefore hold its copyright; a fake built from that photo can often be reported as an unauthorized derivative. Separately, most major platforms (Google, Meta, X) have specific reporting flows for non-consensual intimate imagery, and those do not depend on copyright at all.

There are also organizations like StopNCII.org. They use "hashing" technology. Basically, you select the image you're worried about on your own device, the tool creates a digital fingerprint of it, and only that fingerprint is shared with participating tech companies; the image itself never leaves your device. If anyone tries to upload a matching image to one of those platforms, it can be detected and removed. It’s a proactive shield, though it only covers images you already have a copy of and platforms that take part.
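To make the fingerprint idea concrete, here is a minimal sketch of a perceptual hash. It is a deliberately simple "average hash" written for illustration and is not the algorithm StopNCII or any platform actually runs; the 4x4 pixel grids and the `max_distance` threshold are made-up values. The point is that two slightly different copies of the same picture produce nearly identical fingerprints, while an unrelated picture does not.

```python
from typing import List

Grid = List[List[int]]  # grayscale pixel values, 0-255


def average_hash(pixels: Grid) -> int:
    """Build a bit fingerprint: 1 where a pixel is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions where two fingerprints differ."""
    return bin(a ^ b).count("1")


def is_match(a: int, b: int, max_distance: int = 2) -> bool:
    """Treat fingerprints within max_distance bits as the same image."""
    return hamming_distance(a, b) <= max_distance


# Hypothetical 4x4 thumbnails standing in for real, downscaled images.
original = [
    [200, 210, 40, 30],
    [190, 220, 50, 20],
    [60, 70, 180, 170],
    [50, 40, 160, 150],
]
# The same picture, slightly brightened (as if re-saved or filtered).
recompressed = [[min(255, p + 5) for p in row] for row in original]
# A completely different picture.
unrelated = [
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
]

h_original = average_hash(original)
h_recompressed = average_hash(recompressed)
h_unrelated = average_hash(unrelated)

print(is_match(h_original, h_recompressed))  # True: same image, small edit
print(is_match(h_original, h_unrelated))     # False: different image
```

Only the fingerprint needs to be shared for matching to work, which is why a service built this way never has to see the picture itself.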

Practical Steps for Digital Self-Defense

The "genie" is out of the bottle. We can’t un-invent generative AI, but we can change how we interact with the digital world.

Lock down your socials. It sounds basic, but most of these bots rely on photos pulled from public profiles. If your Instagram is private, a "scraper" can't easily grab your photos to feed into these tools.

Use watermarks. If you are a creator or influencer, subtle watermarking can deter casual reuse, and "cloaking" tools such as Glaze (built to disrupt AI mimicry of artists' work) may make your images harder for AI to manipulate effectively, though none of these is a guarantee.

Support legislative action. Follow groups like the Electronic Frontier Foundation (EFF) or the Cyber Civil Rights Initiative (CCRI). They are the ones actually in the rooms with lawmakers trying to get these digital assault laws passed.

Talk to your kids. This is the new "talk." It's not just about "stranger danger" anymore; it's about digital reputation and the fact that a "prank" using deepfake tech can have permanent, life-altering legal consequences.

The technology behind AI fake nude porn is a tool, and like any tool, it can be used for harm. Staying informed and being proactive about your digital footprint is the only way to navigate this new, often hostile, landscape.

Immediate Action Items

- Audit your public photos: Go to Google Images and search your name. See what's out there. If there are old, public albums on Flickr or Facebook from ten years ago, delete them or set them to private.

- Set up Google Alerts: Create an alert for your name. It’s not foolproof, but it’s a good early warning system for any new content indexed under your identity.

- Report, don't share: If you see a deepfake of someone else, do not share it "to raise awareness." Report it to the platform immediately. Every view and share feeds the algorithm and incentivizes the creators.

- Check platform settings: Go into your privacy settings on platforms like LinkedIn and Instagram to ensure your "Discoverability" is limited to people you actually know.